Introduction

Large language models (LLMs) are changing not only natural language processing but also how applications access external data. The Model Context Protocol (MCP) is an open standard that lets AI applications connect to a wide ecosystem of data sources, tools, and services through a unified interface, much as a USB-C port simplifies connections between devices.

What is MCP?

Introduced by Anthropic in late 2024, MCP bridges the gap between LLMs and external data. Traditionally, AI applications required unique integrations for each external tool or data source, leading to fragmented systems and redundant development efforts. MCP addresses this by providing a universal protocol that lets an AI model interact with multiple external systems through a single integration point.

Built on the established JSON-RPC 2.0 standard, MCP defines a clear lifecycle for client-server interactions: initialization, regular operation, and graceful shutdown. MCP servers expose a set of capabilities, such as tools, prompts, and resources, that clients can invoke on demand. Because the protocol is standardized, any compliant client can work with any compliant server without server-specific integration code, which gives a plug-and-play experience.
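To make the lifecycle concrete, here is a minimal sketch of the JSON-RPC 2.0 messages a client might send during initialization and early operation. The method names follow the MCP specification; the client name, version, and advertised capabilities are illustrative assumptions, and nothing is actually sent to a server.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids should be unique within a session


def request(method, params=None):
    """Build a JSON-RPC 2.0 request (carries an id, so a response is expected)."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def notification(method, params=None):
    """Build a JSON-RPC 2.0 notification (no id, so no response is sent)."""
    msg = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# 1. Initialization: the client proposes a protocol version and its capabilities.
initialize = request("initialize", {
    "protocolVersion": "2024-11-05",
    "capabilities": {"roots": {}, "sampling": {}},
    "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
})

# 2. Once the server has replied, the client confirms it is ready.
initialized = notification("notifications/initialized")

# 3. Regular operation: for example, discover the tools the server offers.
list_tools = request("tools/list")

for message in (initialize, initialized, list_tools):
    print(json.dumps(message))
```

Shutdown has no dedicated message in this sketch; as far as the specification describes it, a session ends when the underlying transport is closed.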

The Challenges with Current Integration Methods

Today’s approach to connecting LLMs to various tools and services involves individually integrating each one using its specific API. This method poses several challenges:

- High Engineering Effort: Each connection requires manual work and constant maintenance.

- Fragmented Systems: Differing data formats and communication protocols mean that each integration amounts to “gluing” disparate systems together.

- Maintenance Nightmares: When an external service updates its API, it can break the entire integration chain, necessitating frequent updates.

Core Features of MCP

Tools

Tools are executable functions that LLMs can call to perform tasks ranging from simple calculations to complex operations like querying a database or booking travel. Each tool declares a name, a description, and an input schema, and is invoked when the LLM decides, based on the user’s request, that the tool is needed.

Example: A tool that adds two numbers or retrieves the latest commit details from a repository.
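As a rough illustration of the "add two numbers" example, the sketch below pairs a tool definition with a handler for a `tools/call` request. The field names (`name`, `description`, `inputSchema`, `content`, `isError`) follow the MCP specification; the `TOOLS` registry and `handle_tools_call` function are hypothetical names, and the argument check is a simplification rather than full JSON Schema validation.

```python
def add(a, b):
    return a + b


# Hypothetical in-memory registry: tool name -> (definition, handler).
TOOLS = {
    "add": (
        {
            "name": "add",
            "description": "Add two numbers.",
            "inputSchema": {
                "type": "object",
                "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
                "required": ["a", "b"],
            },
        },
        add,
    ),
}


def handle_tools_call(params):
    """Run the named tool and wrap its output in a tool result."""
    name = params["name"]
    if name not in TOOLS:
        # A real server would answer with a JSON-RPC error instead of raising.
        raise ValueError(f"unknown tool: {name}")
    definition, handler = TOOLS[name]
    args = params.get("arguments", {})
    missing = [k for k in definition["inputSchema"]["required"] if k not in args]
    if missing:
        text = "missing arguments: " + ", ".join(missing)
        return {"content": [{"type": "text", "text": text}], "isError": True}
    return {"content": [{"type": "text", "text": str(handler(**args))}], "isError": False}


result = handle_tools_call({"name": "add", "arguments": {"a": 2, "b": 3}})
print(result["content"][0]["text"])
```

The input schema is what lets the model work out which arguments to supply, so keeping it precise matters more than the handler itself.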

Prompts

Prompts are predefined templates that guide LLM interactions. They help structure conversations by framing user intent and ensuring consistency across multiple exchanges. Prompts can also be parameterized to fit various contexts.

Example: A prompt that instructs the LLM to generate a LinkedIn post based on provided keywords.
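A parameterized prompt could look roughly like the sketch below, which answers a `prompts/get` request for the LinkedIn example. The result shape (`description` plus a list of `messages`) follows the MCP specification; the `PROMPTS` registry, the template wording, and the `handle_prompts_get` function are assumptions made for illustration.

```python
from string import Template

# Hypothetical registry of prompt templates keyed by prompt name.
PROMPTS = {
    "linkedin_post": {
        "description": "Draft a LinkedIn post from keywords.",
        "arguments": [
            {"name": "keywords", "description": "Comma-separated keywords", "required": True},
        ],
        "template": Template("Write a short LinkedIn post about: $keywords"),
    },
}


def handle_prompts_get(params):
    """Fill the named template with the supplied arguments."""
    prompt = PROMPTS[params["name"]]
    args = params.get("arguments", {})
    for spec in prompt["arguments"]:
        if spec["required"] and spec["name"] not in args:
            raise ValueError(f"missing required argument: {spec['name']}")
    text = prompt["template"].substitute(args)
    return {
        "description": prompt["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }


result = handle_prompts_get(
    {"name": "linkedin_post", "arguments": {"keywords": "MCP, open standards"}}
)
print(result["messages"][0]["content"]["text"])
```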

Resources

Resources supply raw data such as documents, images, or database schemas to ground the LLM’s responses. Grounding responses in current, relevant data can reduce hallucinations and improve the accuracy of outputs.

Example: A resource that fetches current weather data from an external API.
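The weather example might be served along the lines of the sketch below, which handles a `resources/read` request. The `contents` result shape follows the MCP specification; the `weather://` URI scheme, the `fetch_weather` helper, and its hard-coded values are hypothetical stand-ins for a call to a real weather API.

```python
import json


def fetch_weather(city):
    # Placeholder data; a real server would query an external weather API here.
    return {"city": city, "temperature_c": 21.5, "conditions": "clear"}


def handle_resources_read(params):
    """Resolve a weather://<city>/current URI (a made-up scheme) to JSON text."""
    uri = params["uri"]
    prefix, suffix = "weather://", "/current"
    if not (uri.startswith(prefix) and uri.endswith(suffix)):
        raise ValueError(f"unsupported resource URI: {uri}")
    city = uri[len(prefix):-len(suffix)]
    return {
        "contents": [
            {
                "uri": uri,
                "mimeType": "application/json",
                "text": json.dumps(fetch_weather(city)),
            }
        ]
    }


result = handle_resources_read({"uri": "weather://berlin/current"})
print(result["contents"][0]["text"])
```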

Client Features: Sampling and Roots

- Sampling: Allows servers to request LLM completions through the client. The server can state preferences such as cost, speed, and capability, while the client keeps control over which model is actually used.

- Roots: Define the context boundaries provided by the client, such as sharing the current project directory from an IDE with its MCP server.
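Both client features can be sketched with plain data structures. Below, a server's `sampling/createMessage` request carries model preferences, and the client picks a model by weighting them; a `roots/list` style result then bounds which files the server may consider. The request and result field names follow the MCP specification, while the model catalog, its scores, the scoring rule, and the file paths are illustrative assumptions.

```python
# What a server might send to the client to ask for a completion.
sampling_request = {
    "jsonrpc": "2.0",
    "id": 7,
    "method": "sampling/createMessage",
    "params": {
        "messages": [
            {"role": "user", "content": {"type": "text", "text": "Summarize the open files."}}
        ],
        "modelPreferences": {
            "costPriority": 0.2,
            "speedPriority": 0.7,
            "intelligencePriority": 0.1,
        },
        "maxTokens": 200,
    },
}

# Hypothetical client-side catalog; scores in [0, 1], higher is better
# (cheaper, faster, more capable).
MODELS = {
    "small-fast": {"cost": 0.9, "speed": 0.9, "intelligence": 0.3},
    "large-smart": {"cost": 0.2, "speed": 0.3, "intelligence": 0.95},
}


def choose_model(preferences):
    """Pick the model with the best preference-weighted score."""
    def score(traits):
        return (
            preferences.get("costPriority", 0) * traits["cost"]
            + preferences.get("speedPriority", 0) * traits["speed"]
            + preferences.get("intelligencePriority", 0) * traits["intelligence"]
        )
    return max(MODELS, key=lambda name: score(MODELS[name]))


# What a client might return for roots/list (the path is made up).
roots_result = {"roots": [{"uri": "file:///home/user/project", "name": "project"}]}


def within_roots(uri, roots):
    """True if the URI is a root or lies underneath one."""
    return any(
        uri == r["uri"] or uri.startswith(r["uri"].rstrip("/") + "/") for r in roots
    )


chosen = choose_model(sampling_request["params"]["modelPreferences"])
print(chosen)
print(within_roots("file:///home/user/project/src/main.py", roots_result["roots"]))
```

The final choice stays with the client: the server only expresses priorities, so the same server can work with hosts that have very different models available.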

Solving the M×N Integration Problem

The term “M×N integration problem” describes the complexity of connecting M different clients (AI applications) with N different tools or services, each pairing requiring its own integration. This approach results in M×N total integrations, which quickly becomes inefficient and difficult to scale.

MCP solves this challenge by:

- Eliminating Redundancy: A single MCP server can be accessed by many clients without needing unique integration code for each.

- Improving Consistency: Standardized communication reduces errors and inconsistencies.

- Enhancing Scalability: Transforming the integration model from M×N to M+N significantly lowers development overhead.

- Dynamic Context Management: LLMs can pull in real-time data, thereby improving response quality and interactivity.
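The difference between the two models is simple arithmetic. The sketch below compares the number of integrations needed with point-to-point connections against a shared protocol, where each client and each service implements MCP once; the client and service counts are arbitrary examples.

```python
def point_to_point(m, n):
    """Every client integrates with every service separately."""
    return m * n


def with_mcp(m, n):
    """Each client and each service implements the protocol once."""
    return m + n


for m, n in [(3, 4), (10, 50)]:
    print(f"{m} clients, {n} services: "
          f"{point_to_point(m, n)} integrations vs {with_mcp(m, n)} with MCP")
```

With 10 clients and 50 services, that is 500 bespoke integrations against 60 protocol implementations, and the gap widens as either side grows.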

MCP Architecture and Ecosystem

Architecture Overview

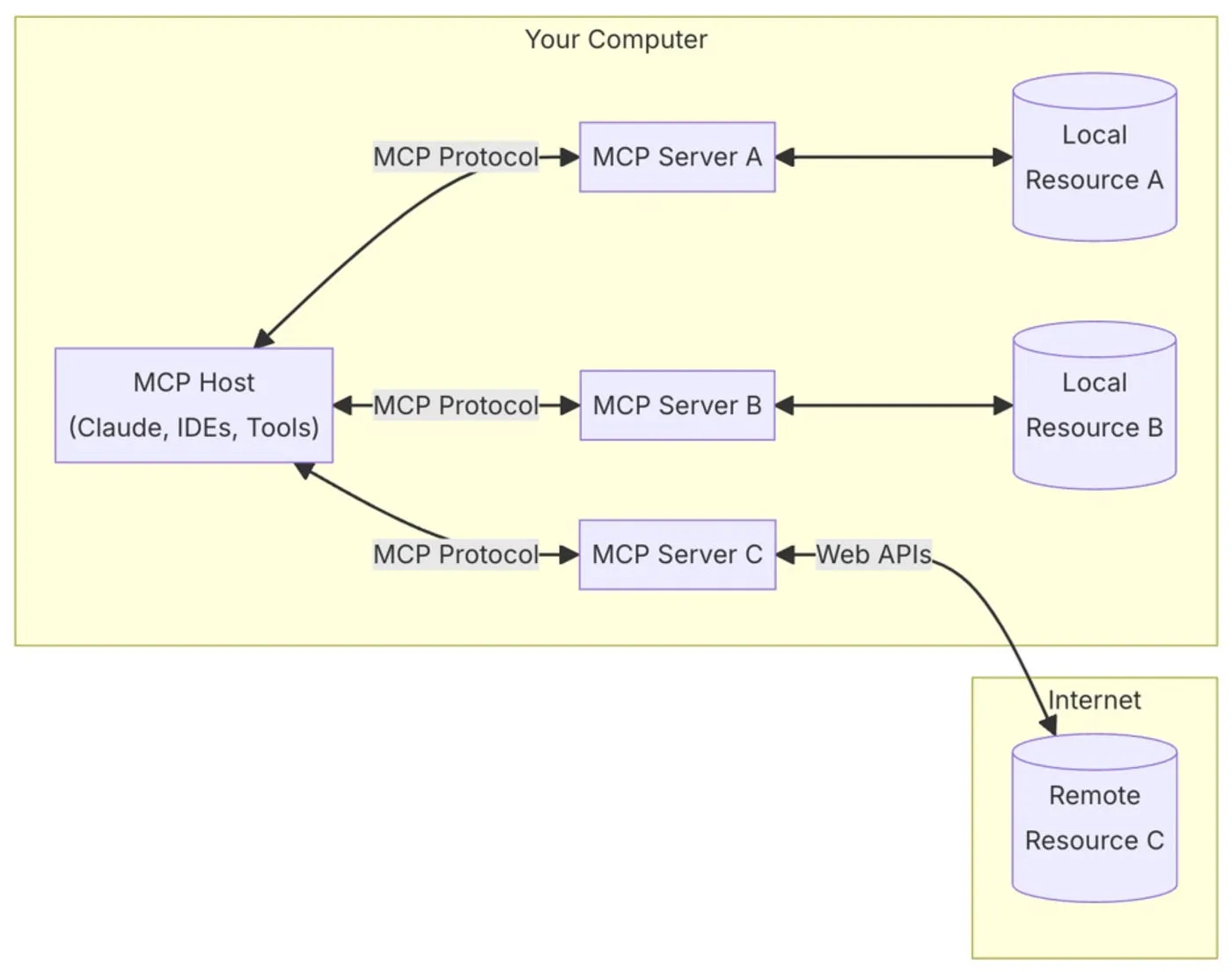

MCP follows a client-server model with three main components:

- Hosts: These are the primary AI applications (such as Claude Desktop or specialized IDEs) that initiate interactions.

- Clients: Components within the host that manage one-to-one connections with MCP servers, ensuring secure and reliable communication.

- Servers: Lightweight processes that expose external tools, prompts, and resources according to the MCP specification.

Source: modelcontextprotocol.io
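The relationship between the three components can be sketched in memory, without any real transport. In the sketch below a host owns one client per server, and each client talks to exactly one server. The class names mirror the roles described above, but the classes themselves, their methods, and the server and tool names are hypothetical and not part of any MCP SDK.

```python
class Server:
    """Stands in for a separate MCP server process that exposes tools."""

    def __init__(self, name, tools):
        self.name = name
        self.tools = tools  # list of tool names

    def handle(self, request):
        if request["method"] == "tools/list":
            result = {"tools": [{"name": t} for t in self.tools]}
            return {"jsonrpc": "2.0", "id": request["id"], "result": result}
        error = {"code": -32601, "message": "Method not found"}
        return {"jsonrpc": "2.0", "id": request["id"], "error": error}


class Client:
    """Manages a one-to-one connection with a single server."""

    def __init__(self, server):
        self._server = server
        self._next_id = 1

    def list_tools(self):
        request = {"jsonrpc": "2.0", "id": self._next_id, "method": "tools/list"}
        self._next_id += 1
        response = self._server.handle(request)
        return [tool["name"] for tool in response["result"]["tools"]]


class Host:
    """The AI application; creates one client per connected server."""

    def __init__(self):
        self.clients = {}

    def connect(self, server):
        self.clients[server.name] = Client(server)

    def available_tools(self):
        return {name: client.list_tools() for name, client in self.clients.items()}


host = Host()
host.connect(Server("git", ["latest_commit"]))
host.connect(Server("calculator", ["add"]))
print(host.available_tools())
```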


Supported Transports

MCP is transport-agnostic and can operate over several mediums:

- Stdio Transport: Ideal for local development, where the server communicates via standard input/output.

- HTTP with Server-Sent Events (SSE): Suitable for remote connections, using HTTP POST for client requests and SSE for server-to-client messages. Later revisions of the specification replace this with a Streamable HTTP transport.

- Custom Transports: Developers can also implement additional layers, such as WebSockets, for full-duplex communication.
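For the stdio transport, messages are JSON-RPC objects written one per line. The sketch below shows that framing using an in-memory stream in place of a real process's standard input and output; the `write_message` and `read_messages` helpers are hypothetical names, and the tool call in the second message is a made-up example.

```python
import io
import json


def write_message(stream, message):
    """Serialize one message per line; json.dumps escapes embedded newlines."""
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream):
    """Yield one parsed message for each non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# An in-memory stream stands in for the server's stdin.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
write_message(pipe, {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "echo", "arguments": {"text": "line one\nline two"}},  # hypothetical tool
})

pipe.seek(0)
received = list(read_messages(pipe))
print(len(received), received[0]["method"])
```

Because each message occupies exactly one line, a reader can split the stream on newlines without parsing partial JSON, which is part of what makes stdio convenient for local development.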

Enhancing LLM Models with MCP

Adopting MCP empowers LLMs to:

- Access Real-Time Data: Seamlessly integrate current external information for more accurate responses.

- Trigger Autonomous Actions: Perform tasks like creating repositories or sending emails directly.

- Minimize Hallucinations: Leverage rich, structured context from prompts and resources.

- Adapt Dynamically: Use sampling to adjust to changing contexts and user needs in real time.

Conclusion

The Model Context Protocol (MCP) marks a significant advancement in AI integration. By standardizing the way LLMs share context, invoke tools, and access resources, MCP simplifies integration efforts and lets AI systems perform dynamic, context-rich actions in real time. With support for multiple transports, a clearly defined client-server lifecycle, and a growing ecosystem of pre-built integrations and SDKs, MCP is well positioned to become the backbone of next-generation AI applications.