Introduction to OpenAI's function calling

Introduction

The latest iterations of OpenAI’s large language models can interact with external systems through a mechanism called function calling.

Function calling lets us describe functions to the model in JSON and rely on the model’s reasoning to decide whether to call one of them before generating a response.

The model does not execute the function; instead, the model generates JSON that contains the function name and parameters required for executing the function.

How function calling works

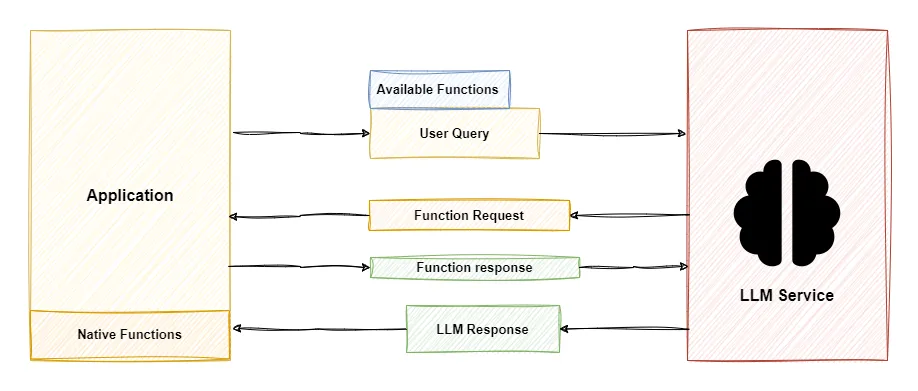

Now let’s see how function calling works internally. Suppose a user asks the LLM for the current time. On its own, the LLM can’t answer because it has no access to real-time data.

User: What's the current time?

Assistant: As a large language model, I don't have access to the current time.

Now let’s add function calling so the model can answer the user’s query.

First, we send the query along with a list of available functions.

User: What's the current time?

Function: getCurrentTime()

Next, the LLM receives the query and, instead of generating a user-facing response, generates a tool call in JSON format.

User: What's the current time?

Function: getCurrentTime()

ToolCall: getCurrentTime()

The application then receives this request, executes the requested function, and appends the function response to the chat history.

User: What's the current time?

Function: getCurrentTime()

ToolCall: getCurrentTime()

ToolCallResponse: "4:19 pm"

Now, with the context acquired through function calling, the LLM service can send a user-facing response.

User: What's the current time?

Function: getCurrentTime()

ToolCall: getCurrentTime()

ToolCallResponse: "4:19 pm"

Assistant: The current time is 4:19 pm

Internally we make at least two calls to the LLM when a function call is involved, but the end user does not see these intermediate calls and only sees the final response generated by the LLM service.

User: What's the current time?

Assistant: The current time is 4:19 pm
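The walkthrough above can be sketched as a small loop. This is only an illustration under stated assumptions: `fake_llm` is a hypothetical stand-in for the model (no real API is called), and the simplified `tool_call` field is not OpenAI's actual response format.

```python
from datetime import datetime


def get_current_time() -> str:
    """Local tool owned by the application; the model never runs it directly."""
    return datetime.now().strftime("%I:%M %p").lstrip("0").lower()


# Function names the model may request, mapped to real Python callables.
TOOLS = {"getCurrentTime": get_current_time}


def fake_llm(messages):
    """Hypothetical stand-in for the model: asks for the tool once, then answers."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "assistant", "content": f"The current time is {last['content']}"}
    return {"role": "assistant", "content": None, "tool_call": {"name": "getCurrentTime"}}


def answer(question: str):
    """Run the loop until the model produces a user-facing reply."""
    messages = [{"role": "user", "content": question}]
    while True:
        reply = fake_llm(messages)  # one round trip to the "model"
        messages.append(reply)
        if reply.get("tool_call") is None:
            return reply["content"], messages
        # The application, not the model, executes the requested function.
        result = TOOLS[reply["tool_call"]["name"]]()
        messages.append({"role": "tool", "content": result})


text, history = answer("What's the current time?")
print(text)
```

The returned history holds four entries (user, assistant tool call, tool result, assistant answer), which matches the two model calls described above; only the last entry is shown to the user.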

Function calling with OpenAI API

When working with the OpenAI API, we describe getCurrentTime using the JSON spec defined by OpenAI.

We can also pass multiple functions at once, but note that each additional function increases the prompt size and so reduces the context available for the rest of the conversation.
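As a rough illustration of that cost, the sketch below builds a list of function specs in the tools format this article uses and measures the serialized size as each one is added. The helper `make_tool` and the functions `getCurrentDate` and `getTimeZone` are hypothetical, and character count is only a crude proxy for the tokens the model actually consumes.

```python
import json


def make_tool(name: str, description: str) -> dict:
    """Build a no-argument function spec (hypothetical helper)."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {"type": "object", "properties": {}},
        },
    }


tools = [
    make_tool("getCurrentTime", "Get the current time"),
    make_tool("getCurrentDate", "Get the current date"),      # hypothetical
    make_tool("getTimeZone", "Get the user's time zone"),     # hypothetical
]

# Serialized size after adding each function, in characters.
sizes = [len(json.dumps(tools[: n + 1])) for n in range(len(tools))]
print(sizes)
```

Each added spec makes the payload larger, so it can be worth sending only the functions relevant to the current conversation.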

When calling the API, we attach the JSON spec for our function in the request body.

    "tools": [
      {
        "type": "function",
        "function": {
          "name": "getCurrentTime",
          "description": "Get the current time",
          "parameters": {
            "type": "object",
            "properties": {}
          }
        }
      }
    ]

Along with this, we can control whether the model calls a function or just generates a user-facing response by setting the tool_choice parameter.

By default, tool_choice is set to none if no functions are present in the request; otherwise it is set to auto.

    "tool_choice": "auto"

When the request is made and a function call is required, the API does not send a user-facing response through content. Instead, it returns a tool call with the required function name and its arguments, if any.

    "message": {
      "role": "assistant",
      "content": null,
      "tool_calls": [
        {
          "id": "randomid_393293",
          "type": "function",
          "function": {
            "name": "getCurrentTime",
            "arguments": ""
          }
        }
      ]
    }

After executing the function in our application, we send the function’s result back as additional context so the model can generate a user-facing response.
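A minimal sketch of that last step follows. It assumes the follow-up uses a message with role `tool` and a `tool_call_id` matching the id of the tool call, which is my understanding of the Chat Completions message format; verify it against the API reference. The helper `run_tool_calls` and the registry `AVAILABLE` are hypothetical names, and no request is actually sent.

```python
import json
from datetime import datetime


def get_current_time() -> str:
    return datetime.now().strftime("%H:%M")


# Functions our application really implements, keyed by the name in the spec.
AVAILABLE = {"getCurrentTime": get_current_time}


def run_tool_calls(messages, assistant_message):
    """Return a new history with the assistant turn plus one tool result per call."""
    updated = messages + [assistant_message]
    for call in assistant_message.get("tool_calls") or []:
        fn = call["function"]
        # "arguments" is a JSON-encoded string; treat an empty string as no arguments.
        args = json.loads(fn["arguments"] or "{}")
        result = AVAILABLE[fn["name"]](**args)
        updated.append({
            "role": "tool",
            "tool_call_id": call["id"],  # ties the result to the request
            "content": str(result),
        })
    return updated


# The assistant message from the example response above.
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "randomid_393293",
        "type": "function",
        "function": {"name": "getCurrentTime", "arguments": ""},
    }],
}

messages = [{"role": "user", "content": "What's the current time?"}]
followup = run_tool_calls(messages, assistant_message)
print(json.dumps(followup, indent=2))
```

The resulting list would be sent as the message history of the second request, giving the model the function result it needs to write the final answer.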

NOTE: The JSON schema can list functions that our application does not actually implement. The LLM service may then request these non-existent functions, leading to failed responses.
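One way to soften this failure mode is to look up every requested function in a registry and return a readable error instead of crashing. The following is a sketch under assumptions: `REGISTRY` and `safe_dispatch` are hypothetical names, `getWeather` stands in for a function that was advertised but never implemented, and the fixed return value only keeps the example deterministic.

```python
import json


def get_current_time() -> str:
    return "4:19 pm"  # fixed value so the sketch is deterministic


# Only functions that really exist in the application belong here.
REGISTRY = {"getCurrentTime": get_current_time}


def safe_dispatch(name: str, arguments: str) -> str:
    """Run a requested function, or return an error string the model can read."""
    handler = REGISTRY.get(name)
    if handler is None:
        return json.dumps({"error": f"unknown function: {name}"})
    try:
        kwargs = json.loads(arguments or "{}")
        return json.dumps({"result": handler(**kwargs)})
    except (json.JSONDecodeError, TypeError) as exc:
        # Malformed JSON, or arguments the function does not accept.
        return json.dumps({"error": f"bad arguments for {name}: {exc}"})


print(safe_dispatch("getCurrentTime", ""))
print(safe_dispatch("getWeather", "{}"))  # hypothetical, not implemented
```

Returning the error as the function result lets the model explain the problem to the user or try something else, rather than leaving the request to fail.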

Wrapping up

This was a simple introduction to function calling and how it works. In the next blog, we will work with function calling using Semantic Kernel in our demo application.

With function calling, we have just begun to explore the latent skills present in these LLMs. As we become more familiar with these models and improve them, there will be an explosion of discoveries and innovative new ways to use these systems.