Using Semantic Kernel with local embeddings

Using a local embedding service with Semantic Kernel

Introduction

Semantic Kernel is an open-source SDK that simplifies integrating Large Language Models (LLMs) from providers like OpenAI and Azure OpenAI into regular applications. While the integrations offered by the Semantic Kernel SDK are impressive, the SDK can also be extended to connect with local models without changing much of our existing codebase.

There are several advantages to using a custom embedding service rather than a proprietary hosted model. Having complete control over both the frontend and the backend lets you tailor them to your exact use case.

Integrating with Semantic Kernel

In the previous blog post, we implemented a local embedding server that generates text embeddings for the provided input through a REST API.

Now let’s integrate it into an application through the Semantic Kernel SDK. We will create a new console application for this demo.

The Semantic Kernel SDK allows us to integrate our custom embedding services by implementing the ITextEmbeddingGenerationService interface.

Once the project is created, we will add the DTOs for the request and response to match our embedding API:

    // Request body sent to the embedding API.
    public class EmbeddingRequest
    {
        public string Input { get; set; } = string.Empty;
    }

    // Response body: one list of floats per generated embedding.
    public class EmbeddingResponse
    {
        public List<List<float>> Data { get; set; } = new();
    }

Now we will implement the ITextEmbeddingGenerationService interface in LocalEmbeddingService.cs.

Internally, the service makes an API call to the /embedding endpoint to generate an embedding and returns the output.
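Since that call exchanges JSON, it can help to see what the DTOs look like on the wire. System.Text.Json writes property names exactly as declared and matches them case-sensitively when reading, so the classes above send Input and expect Data. The sketch below assumes the server uses lowercase input and data keys instead, which is only a guess because the server's schema is not shown here. WireRequest and WireResponse are hypothetical stand-ins for the DTOs, and the sample response body is made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;
using System.Text.Json.Serialization;

// Serialize a request to see the JSON that would be posted.
string requestJson = JsonSerializer.Serialize(new WireRequest { Input = "Hello world" });
Console.WriteLine(requestJson); // prints {"input":"Hello world"}

// Made-up response body; the real server's shape may differ.
const string sampleResponse = "{\"data\":[[0.1,0.2,0.3]]}";
WireResponse? parsed = JsonSerializer.Deserialize<WireResponse>(sampleResponse);
Console.WriteLine(parsed?.Data[0].Count); // prints 3

// Hypothetical copies of the DTOs with explicit JSON names (assumed: lowercase keys).
public class WireRequest
{
    [JsonPropertyName("input")]
    public string Input { get; set; } = string.Empty;
}

public class WireResponse
{
    [JsonPropertyName("data")]
    public List<List<float>> Data { get; set; } = new();
}
```

If the server already uses the PascalCase names, the attributes are unnecessary. Another option is to pass a JsonSerializerOptions instance with PropertyNameCaseInsensitive set to true when deserializing.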

    // System.Text and System.Text.Json are not covered by the default implicit usings.
    using System.Text;
    using System.Text.Json;
    using Microsoft.SemanticKernel;
    using Microsoft.SemanticKernel.Embeddings;

    // Depending on the SDK version, this interface may be flagged as experimental
    // (SKEXP0001) and require the diagnostic to be suppressed.
    public class LocalEmbeddingService : ITextEmbeddingGenerationService
    {
        // Reuse a single HttpClient instead of creating and disposing one per call.
        private static readonly HttpClient Client = new()
        {
            BaseAddress = new Uri("http://127.0.0.1:8000/")
        };

        public IReadOnlyDictionary<string, object?> Attributes { get; } = new Dictionary<string, object?>();

        public async Task<IList<ReadOnlyMemory<float>>> GenerateEmbeddingsAsync(
            IList<string> prompt,
            Kernel? kernel = null,
            CancellationToken cancellationToken = default)
        {
            // For simplicity, only the first input is embedded; any others are ignored.
            var requestBody = new EmbeddingRequest { Input = prompt[0] };
            var serializedBody = JsonSerializer.Serialize(requestBody);
            using var content = new StringContent(serializedBody, Encoding.UTF8, "application/json");

            // Send a POST request to the "/embedding" endpoint with the content.
            using var response = await Client.PostAsync("/embedding", content, cancellationToken);
            response.EnsureSuccessStatusCode();

            using var responseStream = await response.Content.ReadAsStreamAsync(cancellationToken);
            var list = new List<ReadOnlyMemory<float>>();

            try
            {
                // Deserialize the response stream into an EmbeddingResponse object.
                var embeddingsResponse = await JsonSerializer.DeserializeAsync<EmbeddingResponse>(
                    responseStream, cancellationToken: cancellationToken);

                // Guard against an empty body or a missing Data property.
                foreach (var item in embeddingsResponse?.Data ?? new List<List<float>>())
                {
                    list.Add(new ReadOnlyMemory<float>(item.ToArray()));
                }
            }
            catch (JsonException ex)
            {
                // Malformed JSON: report it and return whatever was parsed (possibly nothing).
                Console.Error.WriteLine(ex.Message);
            }

            return list;
        }
    }

We can also implement an extension method to add our service to the kernel easily. This is similar to how the SDK adds services like Azure OpenAI or OpenAI with AddAzureOpenAITextEmbeddingGeneration or AddOpenAITextEmbeddingGeneration respectively.

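    using Microsoft.Extensions.DependencyInjection;
    using Microsoft.SemanticKernel;
    using Microsoft.SemanticKernel.Embeddings;

    public static class CustomKernelExtension
    {
        // Registers LocalEmbeddingService as the kernel's text embedding service.
        public static IKernelBuilder AddLocalEmbeddingGeneration(this IKernelBuilder builder)
        {
            // A null service key registers the instance as the default (unkeyed) service.
            builder.Services.AddKeyedSingleton<ITextEmbeddingGenerationService>(null, new LocalEmbeddingService());
            return builder;
        }
    }

Once this is set up, we can start calling our embeddings API from the console app. Here’s a sample that generates an embedding for text entered in the console.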

    using Microsoft.SemanticKernel;
    using Microsoft.SemanticKernel.Embeddings;

    internal class Program
    {
        static async Task Main(string[] args)
        {
            // Create a kernel and add the local embedding generation module.
            Kernel kernel = Kernel.CreateBuilder()
                .AddLocalEmbeddingGeneration()
                .Build();

            // Get the required service for text embedding generation registered in the kernel.
            var embeddingService = kernel.GetRequiredService<ITextEmbeddingGenerationService>();

            Console.WriteLine("Enter text:");
            // ReadLine returns null at end of input, so fall back to an empty string.
            var txt = Console.ReadLine() ?? string.Empty;

            // Generate embeddings for the provided text.
            var response = await embeddingService.GenerateEmbeddingsAsync(new[] { txt });
            if (response.Count == 0)
            {
                Console.WriteLine("No embedding was returned.");
                return;
            }

            // Loop through each embedding value and print it on the console.
            for (int i = 0; i < response[0].Length; i++)
            {
                Console.WriteLine(response[0].Span[i]);
            }
        }
    }

Before running the project, make sure that your embedding server is up and running.
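One way to check this from code is a small reachability probe. The sketch below is only an illustration: ServerProbe and IsReachableAsync are hypothetical names, not part of Semantic Kernel, and the address is the one hard-coded in the service above. It treats any HTTP response, even an error status, as proof that something is listening on the port.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Base address taken from the service above; adjust it if your server listens elsewhere.
bool reachable = await ServerProbe.IsReachableAsync(new Uri("http://127.0.0.1:8000/"));
Console.WriteLine(reachable
    ? "Embedding server is reachable."
    : "Embedding server is not reachable; start it first.");

// Hypothetical helper for illustration only.
public static class ServerProbe
{
    public static async Task<bool> IsReachableAsync(Uri baseAddress, int timeoutSeconds = 2)
    {
        using var client = new HttpClient { Timeout = TimeSpan.FromSeconds(timeoutSeconds) };
        try
        {
            // Any HTTP response, even 404, shows that a server accepted the connection.
            using var response = await client.GetAsync(baseAddress);
            return true;
        }
        catch (HttpRequestException)
        {
            // Connection refused or a similar network-level failure.
            return false;
        }
        catch (TaskCanceledException)
        {
            // The request did not complete within the timeout.
            return false;
        }
    }
}
```

The probe only confirms that the port answers HTTP. It does not verify that the /embedding endpoint itself works.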

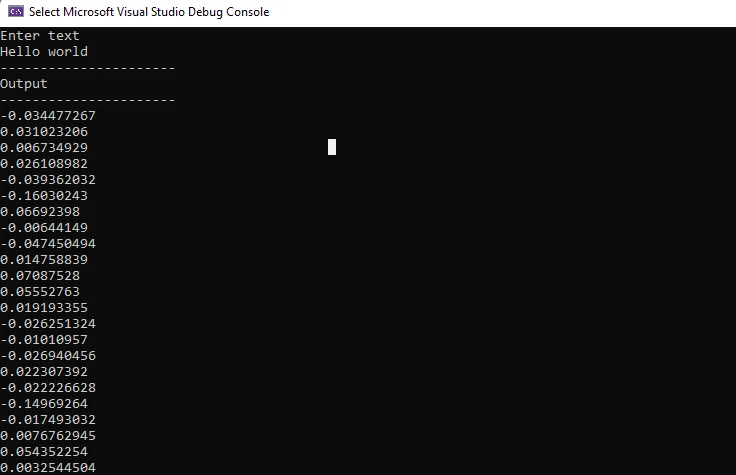

Once the console app is running, enter some sample input such as Hello world. If all the steps were followed, the embeddings generated by our local model will be printed as output.

Great, now we have a basic setup for generating embeddings. Here we generate a single embedding for simplicity, but real-life scenarios may require generating embeddings in bulk, anywhere from a few thousand to millions or even billions. Depending on the use case, the implementation should change accordingly.
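As one possible starting point for bulk work, the inputs can be split into fixed-size batches that are sent one after another. The sketch below is an assumption-laden illustration: BatchEmbedder and EmbedInBatchesAsync are hypothetical names, and the embedBatchAsync delegate stands in for a call to a server endpoint that accepts several inputs at once, which the setup in this post does not provide. The demo uses a fake embedder that returns each string's length as a one-dimensional vector.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

// Demo with a stand-in embedder: one 1-dimensional vector per input string.
var inputs = new List<string> { "a", "bb", "ccc", "dddd", "eeeee" };
var vectors = await BatchEmbedder.EmbedInBatchesAsync(
    inputs,
    batchSize: 2,
    embedBatchAsync: batch => Task.FromResult<IList<ReadOnlyMemory<float>>>(
        batch.Select(s => new ReadOnlyMemory<float>(new[] { (float)s.Length })).ToList()));
Console.WriteLine(vectors.Count); // prints 5

// Hypothetical helper for illustration only.
public static class BatchEmbedder
{
    public static async Task<IList<ReadOnlyMemory<float>>> EmbedInBatchesAsync(
        IReadOnlyList<string> inputs,
        int batchSize,
        Func<IList<string>, Task<IList<ReadOnlyMemory<float>>>> embedBatchAsync)
    {
        if (batchSize <= 0) throw new ArgumentOutOfRangeException(nameof(batchSize));

        var results = new List<ReadOnlyMemory<float>>(inputs.Count);

        // Enumerable.Chunk (.NET 6 or later) yields arrays of at most batchSize items.
        foreach (string[] batch in inputs.Chunk(batchSize))
        {
            // One call per batch, awaited in order so results line up with the inputs.
            results.AddRange(await embedBatchAsync(batch));
        }

        return results;
    }
}
```

Sequential batches keep the ordering simple and avoid overloading a local server. For very large volumes, sending a bounded number of batches in parallel may be worth considering.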