Getting Started with Semantic Kernel using .NET

Getting familiar with Semantic Kernel and its functionality

NOTE: The Semantic Kernel package is currently in pre-release and may introduce breaking changes before the V1 release.

Semantic Kernel is an AI orchestration layer that makes it easier to combine your AI services with your applications, allowing you to create brand-new workflows and user experiences.

In this blog post, we will get familiar with the Semantic Kernel .NET library.

The Kernel

Everything in Semantic Kernel revolves around the Kernel, which acts as a container for all your AI services, plugins, and integrations.

Creating a kernel is as simple as:

```csharp
using Microsoft.SemanticKernel;

Kernel kernel = Kernel.CreateBuilder().Build();
```

However, this kernel is still empty: it has no functions or services yet.

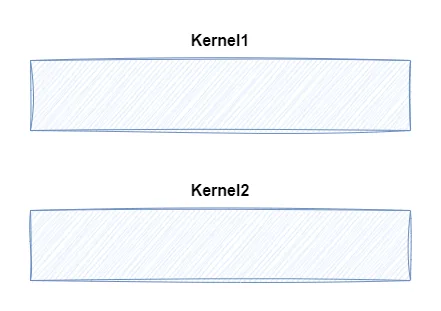

We can register services on the builder returned by CreateBuilder() before calling Build(). Each Build() call creates a separate Kernel instance.

```csharp
IKernelBuilder builder = Kernel.CreateBuilder();

// Register AI services on the builder here

Kernel kernel = builder.Build();

// Register additional services here before building a second, independent kernel

Kernel kernel2 = builder.Build();
```

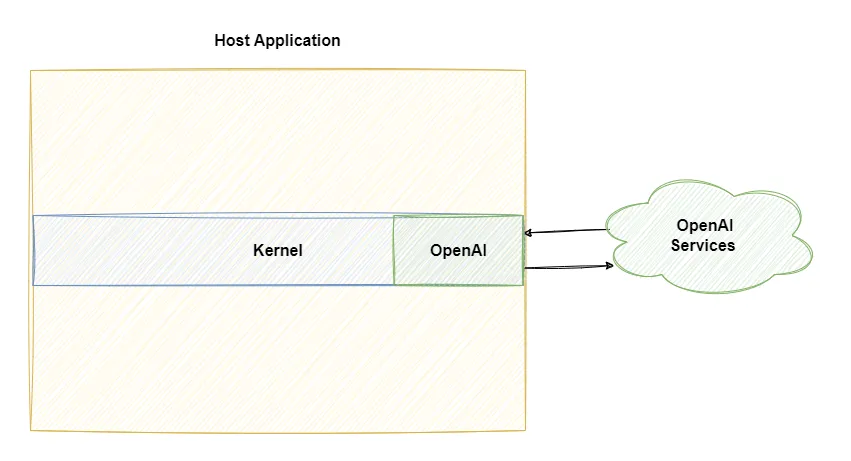
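To check that each Build() call really produces an independent kernel, here is a minimal sketch, assuming the Microsoft.SemanticKernel 1.x NuGet package. GreetingPlugin is a hypothetical plugin used only for this illustration: importing it into one kernel leaves the other kernel untouched.

```csharp
// Sketch assuming the Microsoft.SemanticKernel 1.x NuGet package.
using System;
using Microsoft.SemanticKernel;

IKernelBuilder builder = Kernel.CreateBuilder();

// Two Build() calls on the same builder produce two Kernel instances.
Kernel kernel = builder.Build();
Kernel kernel2 = builder.Build();

// A freshly built kernel starts without any plugins.
Console.WriteLine(kernel.Plugins.Count); // 0

// Importing a plugin into the first kernel does not touch the second one.
kernel.ImportPluginFromType<GreetingPlugin>();

Console.WriteLine(object.ReferenceEquals(kernel, kernel2)); // False
Console.WriteLine(kernel.Plugins.Count);                    // 1
Console.WriteLine(kernel2.Plugins.Count);                   // 0

// Hypothetical plugin, used only for this illustration.
public class GreetingPlugin
{
    [KernelFunction]
    public string Hello() => "Hello!";
}
```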

So let’s give the kernel access to an AI service before building it. We can add the OpenAI or Azure OpenAI connector to our kernel, or we can create an extension that registers our own custom AI service.

```csharp
Kernel kernel = Kernel.CreateBuilder()
    .AddOpenAIChatCompletion(
        modelId: "<Model ID>",
        apiKey: "<OpenAI API key>")
    .Build();
```

Now that we have a kernel instance with an AI service, we can start sending prompts to it using the InvokePromptAsync() method.

```csharp
var response = await kernel.InvokePromptAsync("Tell me a joke?");

// response is a FunctionResult; printing it writes the generated text
Console.WriteLine(response);
```

Even though our application is now connected to an AI service, the responses depend entirely on the LLM being used. The application can call the AI service, but the AI service can’t call any function inside the application.

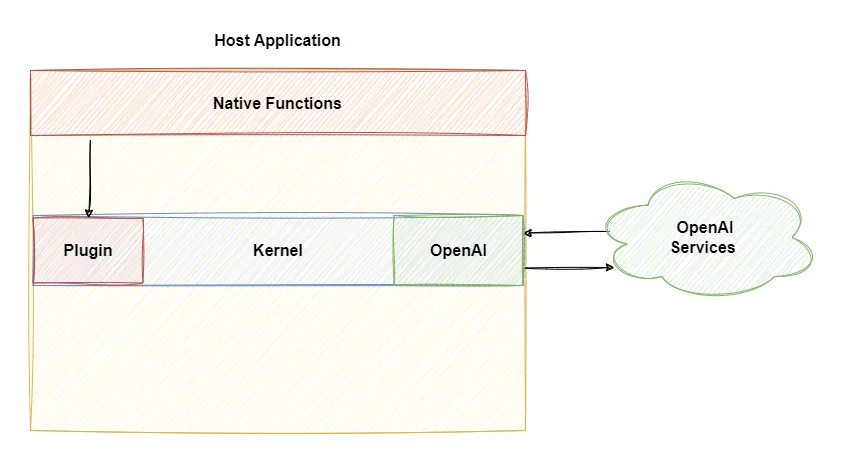
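The custom AI service option mentioned earlier can be tried without any API key. The sketch below assumes the Microsoft.SemanticKernel 1.x package, whose chat connectors implement the IChatCompletionService interface. EchoChatCompletionService is a hypothetical service that simply echoes the last chat message back, which can be handy for exercising InvokePromptAsync() in tests; a real implementation would call your model's API instead.

```csharp
// Sketch assuming the Microsoft.SemanticKernel 1.x NuGet package.
using System;
using System.Collections.Generic;
using System.Runtime.CompilerServices;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;

IKernelBuilder builder = Kernel.CreateBuilder();

// Register the custom service under the same interface the built-in chat connectors use.
builder.Services.AddSingleton<IChatCompletionService>(new EchoChatCompletionService());

Kernel kernel = builder.Build();

FunctionResult response = await kernel.InvokePromptAsync("Tell me a joke?");
Console.WriteLine(response); // Tell me a joke?

// Hypothetical "AI" service that replies with the content of the last chat message.
public sealed class EchoChatCompletionService : IChatCompletionService
{
    public IReadOnlyDictionary<string, object?> Attributes { get; } = new Dictionary<string, object?>();

    public Task<IReadOnlyList<ChatMessageContent>> GetChatMessageContentsAsync(
        ChatHistory chatHistory,
        PromptExecutionSettings? executionSettings = null,
        Kernel? kernel = null,
        CancellationToken cancellationToken = default)
    {
        // Echo the most recent message (the rendered prompt) as the assistant's reply.
        var reply = new ChatMessageContent(AuthorRole.Assistant, chatHistory[^1].Content);
        return Task.FromResult<IReadOnlyList<ChatMessageContent>>(new[] { reply });
    }

    public async IAsyncEnumerable<StreamingChatMessageContent> GetStreamingChatMessageContentsAsync(
        ChatHistory chatHistory,
        PromptExecutionSettings? executionSettings = null,
        Kernel? kernel = null,
        [EnumeratorCancellation] CancellationToken cancellationToken = default)
    {
        // Stream the same echo back as a single chunk.
        await Task.Yield();
        yield return new StreamingChatMessageContent(AuthorRole.Assistant, chatHistory[^1].Content);
    }
}
```

Registering the class under IChatCompletionService is what should let InvokePromptAsync() select it, in the same way it selects the OpenAI connector.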

This is where the second half of Semantic Kernel comes into play: the plugins.

The Plugins

While LLMs are good at generating natural language, they are unreliable at precise logic and calculation, and they cannot interact with your systems on their own. Plugins are groups of functions that provide the toolset the AI services inherently lack.

Think of them as different features we want the AI services to have access to. They can be as simple as a Todo plugin or as complex as complete integrations with your solutions.

Kernel functions come in two types:

- Semantic functions: prompt templates designed for specific tasks, stored as inline strings or in YAML files.

- Native functions: methods written in native code (C# in this post) that contain custom logic.
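A semantic function can be created from an inline string. The sketch below assumes the Microsoft.SemanticKernel 1.x package; TellJoke and the {{$topic}} variable are illustrative names. Instead of calling a model, it renders the template locally, so it runs without any AI service registered.

```csharp
// Sketch assuming the Microsoft.SemanticKernel 1.x NuGet package.
using System;
using Microsoft.SemanticKernel;

Kernel kernel = Kernel.CreateBuilder().Build();

// A semantic function is a prompt template; {{$topic}} is filled in from the arguments.
const string jokePrompt = "Tell me a short joke about {{$topic}}.";

// Creating the function only stores the template; no AI service is called yet.
KernelFunction tellJoke = kernel.CreateFunctionFromPrompt(
    jokePrompt,
    functionName: "TellJoke",
    description: "Tells a short joke about the given topic");

// Render the same template locally to see the prompt the AI service would receive.
IPromptTemplate template = new KernelPromptTemplateFactory().Create(new PromptTemplateConfig(jokePrompt));
string rendered = await template.RenderAsync(kernel, new KernelArguments { ["topic"] = "cats" });

Console.WriteLine(tellJoke.Name); // TellJoke
Console.WriteLine(rendered);      // Tell me a short joke about cats.

// With an AI service registered on the kernel, the function would be invoked like this:
// FunctionResult joke = await kernel.InvokeAsync(tellJoke, new() { ["topic"] = "cats" });
```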

Here’s a simple native function for adding two numbers.

```csharp
[KernelFunction]
[Description("Add two numbers")]
public int Add(
    [Description("First number to add")] int num1,
    [Description("Second number to add")] int num2)
{
    return num1 + num2;
}
```

The KernelFunction attribute marks a class method to be imported as a kernel function when the plugin is imported, while the Description attribute gives the AI service additional context for function calling.

Every kernel function should have a short description of what it does, which helps the AI service pick the right function.

```csharp
using System.ComponentModel;
using Microsoft.SemanticKernel;

public class CalculatorPlugin
{
    [KernelFunction]
    [Description("Add two numbers")]
    public int Add(
        [Description("First number to add")] int num1,
        [Description("Second number to add")] int num2)
    {
        return num1 + num2;
    }

    // No [KernelFunction] attribute, so Subtract is not imported as a kernel function.
    public int Subtract(int num1, int num2)
    {
        return num1 - num2;
    }
}
```

We can either add a class as a plugin while building the kernel, or import it directly into an existing kernel:

```csharp
KernelPlugin calculatorPlugin = kernel.ImportPluginFromType<CalculatorPlugin>();
```
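The other option, registering the plugin while building the kernel, might look like the sketch below (assuming the Microsoft.SemanticKernel 1.x package). It also lists the imported functions with their descriptions, which shows that Subtract, lacking the KernelFunction attribute, is not part of the plugin.

```csharp
// Sketch assuming the Microsoft.SemanticKernel 1.x NuGet package.
using System;
using System.ComponentModel;
using Microsoft.SemanticKernel;

IKernelBuilder builder = Kernel.CreateBuilder();

// Register the plugin on the builder instead of importing it afterwards.
builder.Plugins.AddFromType<CalculatorPlugin>();
Kernel kernel = builder.Build();

// The plugin name defaults to the class name.
KernelPlugin calculator = kernel.Plugins["CalculatorPlugin"];

// Print each imported function and its parameter descriptions.
foreach (KernelFunction function in calculator)
{
    Console.WriteLine($"{function.Name}: {function.Description}");
    foreach (KernelParameterMetadata parameter in function.Metadata.Parameters)
    {
        Console.WriteLine($"  {parameter.Name}: {parameter.Description}");
    }
}

// Invoke by plugin name and function name.
int sum = await kernel.InvokeAsync<int>("CalculatorPlugin", "Add", new() { ["num1"] = 4, ["num2"] = 3 });
Console.WriteLine(sum); // 7

public class CalculatorPlugin
{
    [KernelFunction]
    [Description("Add two numbers")]
    public int Add(
        [Description("First number to add")] int num1,
        [Description("Second number to add")] int num2) => num1 + num2;

    // No [KernelFunction] attribute: not imported into the plugin.
    public int Subtract(int num1, int num2) => num1 - num2;
}
```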

We can now invoke the Add function manually through the kernel's InvokeAsync method:

```csharp
KernelArguments args = new KernelArguments { ["num1"] = 4, ["num2"] = 3 };

// Calls CalculatorPlugin.Add(4, 3); addResult is 7
int addResult = await kernel.InvokeAsync<int>(calculatorPlugin["Add"], args);
```

With the plugin imported, the kernel can also call these functions automatically to enrich prompts before they are sent to the AI service, for example to insert the current time or to feed in context retrieved by a Memory plugin.
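Functions can be called from inside a prompt template with the {{PluginName.FunctionName}} syntax, and the kernel runs them while it renders the prompt. The sketch below assumes the Microsoft.SemanticKernel 1.x package and its default template syntax; ClockPlugin is a hypothetical plugin standing in for a "current time" function. It only renders the template, so no AI service is needed; passing the same template to InvokePromptAsync() would render it the same way before sending it to the model.

```csharp
// Sketch assuming the Microsoft.SemanticKernel 1.x NuGet package.
using System;
using System.ComponentModel;
using System.Globalization;
using Microsoft.SemanticKernel;

Kernel kernel = Kernel.CreateBuilder().Build();
kernel.ImportPluginFromType<CalculatorPlugin>();
kernel.ImportPluginFromType<ClockPlugin>();

// {{Plugin.Function ...}} blocks are executed while the template is rendered.
const string prompt =
    "Today is {{ClockPlugin.Today}}. 4 + 3 = {{CalculatorPlugin.Add num1='4' num2='3'}}.";

IPromptTemplate template = new KernelPromptTemplateFactory().Create(new PromptTemplateConfig(prompt));
string rendered = await template.RenderAsync(kernel);

// Prints the prompt with both function results substituted in.
Console.WriteLine(rendered);

public class CalculatorPlugin
{
    [KernelFunction, Description("Add two numbers")]
    public int Add(
        [Description("First number to add")] int num1,
        [Description("Second number to add")] int num2) => num1 + num2;
}

// Hypothetical plugin standing in for a "current time" function.
public class ClockPlugin
{
    [KernelFunction, Description("Gets today's UTC date as yyyy-MM-dd")]
    public string Today() => DateTime.UtcNow.ToString("yyyy-MM-dd", CultureInfo.InvariantCulture);
}
```

The quoted argument values are strings; the kernel is expected to convert them to the int parameters of Add.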

NOTE: Planners are still experimental and may not be available in V1.

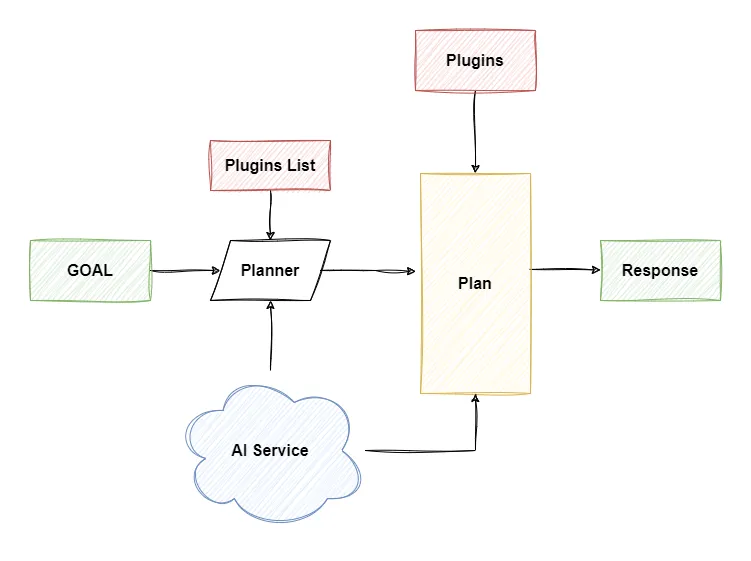

We can also use planners, such as the Handlebars or stepwise planner, to handle more complex workflows.

Planners are specialized classes that generate an execution plan for the AI to follow. The planner receives a goal along with the kernel's available plugins and, with the help of the AI service, produces a plan that lists the steps and the plugin functions required to achieve that goal.

This lets us automate workflows and handle scenarios without coding each step explicitly.

Conclusion

While Semantic Kernel is still in pre-release, it holds immense potential for developers looking to supercharge their applications with AI. With its modularity and flexibility, it can be used to create a wide range of experiences, from simple chatbots to complex intelligent assistants. As the technology matures and more plugins and planners become available, the possibilities will only grow.