What is Semantic Kernel?

An introduction to Semantic Kernel, the lightweight AI app orchestrator, using .NET

Introduction

Semantic Kernel is an open-source SDK that simplifies integrating AI into your existing solutions. It acts as an AI orchestration layer that combines state-of-the-art AI services with your own custom functions, enabling user experiences that were previously impractical to build.

Semantic Kernel is available for C#, Python, and Java, so you can bring AI capabilities into your applications in the language you already use.

Here’s what makes Semantic Kernel stand out:

- Seamless Integration: Semantic Kernel lets you connect AI services to existing applications and services, extending their functionality with just a few lines of code.

- Open-source: Because Semantic Kernel is open source, it fosters an active community and is accessible to anyone who wants to learn from it.

- Lightweight: Semantic Kernel is modular and has minimal dependencies, so you import only the modules you need.

- Flexibility and Customization: Semantic Kernel lets you add your own plugins, planners, and configurations to suit your specific needs. It is designed with recent AI advances in mind, so you can adopt them alongside your existing code.

The Basics

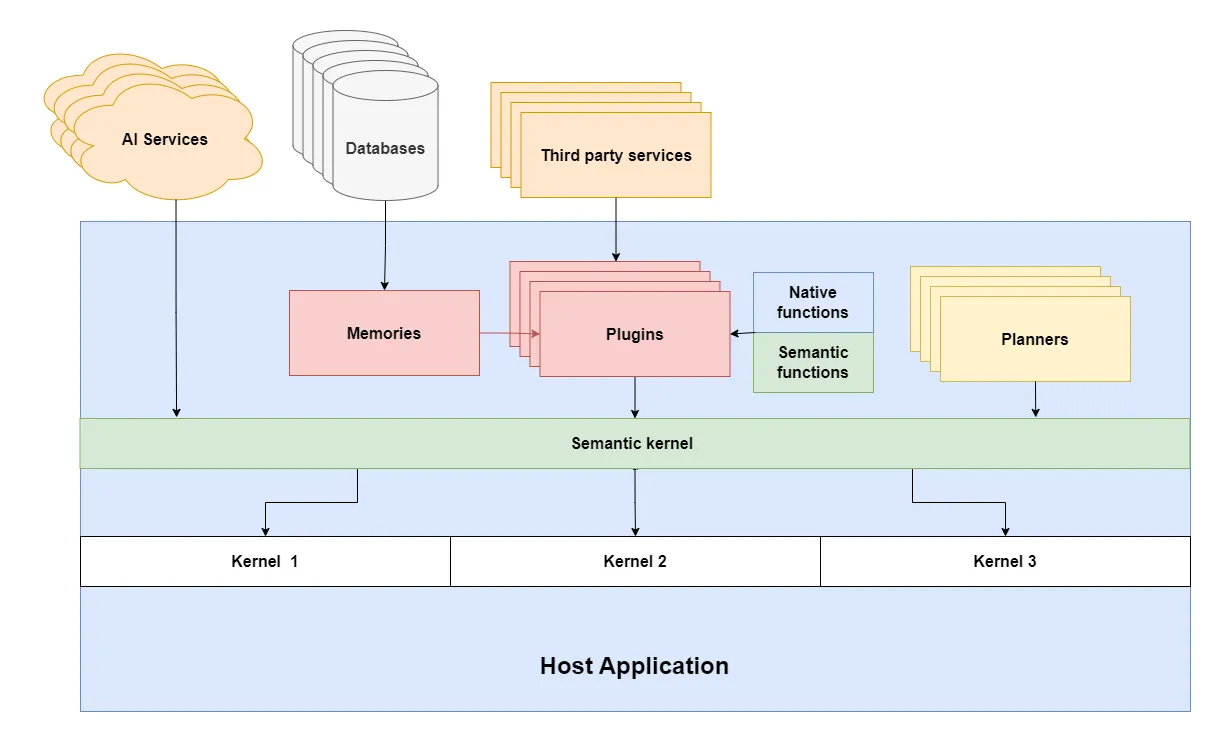

Now, let’s get familiar with the components of Semantic Kernel:

- Kernel: The kernel is the core component and acts like an operating system for AI applications. It manages the configuration, context, functions, and services required to run your AI pipelines.

- Plugins: Plugins are the building blocks of your AI solution. Each plugin is a group of functions that can be exposed to AI applications and AI services, giving them access to the data they need to complete a given task.

- Functions: Semantic Kernel has two kinds of functions. Semantic functions are prompt templates written in natural language that are sent to AI services, whereas native functions are traditional functions written in C# or Python that AI services can call through planners and function calling.

- Memories: Memories are specialized plugins for storing and recalling data. They supply your kernel with the context it needs while it executes requests against your AI services.

- Planners: A planner takes a user’s goal and dynamically generates a plan consisting of the steps needed to achieve that goal. The planner uses an AI model to generate the plan from the functions and services registered in the kernel.
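To make the kernel, plugin, and function roles concrete, here is a minimal Python sketch. It does not use the Semantic Kernel SDK. Every name in it (`Kernel`, `add_native_function`, `add_semantic_function`, `EchoService`) is hypothetical and is not the real SDK API. The fake `EchoService` stands in for an AI service so that the example runs offline. One native function and one semantic function are registered under two plugins.

```python
# Hypothetical toy model of the concepts above; NOT the Semantic Kernel SDK API.
from datetime import date
from string import Template
from typing import Callable, Dict


class EchoService:
    """Hypothetical stand-in for an AI service: echoes the prompt it receives."""

    def complete(self, prompt: str) -> str:
        return f"[model reply to: {prompt}]"


class Kernel:
    """Toy kernel: holds one AI service and functions grouped by plugin name."""

    def __init__(self, service: EchoService) -> None:
        self.service = service
        self.plugins: Dict[str, Dict[str, Callable[..., str]]] = {}

    def add_native_function(self, plugin: str, name: str, fn: Callable[..., str]) -> None:
        # A native function is ordinary code registered under a plugin.
        self.plugins.setdefault(plugin, {})[name] = fn

    def add_semantic_function(self, plugin: str, name: str, template: str) -> None:
        # A semantic function is a natural-language prompt template.
        # Invoking it fills in the template and sends the result to the AI service.
        prompt = Template(template)

        def run(**variables: str) -> str:
            return self.service.complete(prompt.substitute(**variables))

        self.plugins.setdefault(plugin, {})[name] = run

    def invoke(self, plugin: str, name: str, **variables: str) -> str:
        # Both kinds of function are called the same way through the kernel.
        return self.plugins[plugin][name](**variables)


kernel = Kernel(EchoService())
# A fixed date keeps the example deterministic; a real plugin would use date.today().
kernel.add_native_function("time", "today", lambda: date(2024, 1, 15).isoformat())
kernel.add_semantic_function("writer", "summarize", "Summarize in one line: $text")

print(kernel.invoke("time", "today"))
print(kernel.invoke("writer", "summarize", text="Semantic Kernel orchestrates AI."))
```

In this toy model, native code and prompt templates are called through the same `invoke` entry point. That uniformity is what would let a planner or an AI service treat both kinds of function as interchangeable steps.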

This design separates our implementation from the underlying services, so we can easily build specialized kernels from different combinations of plugins, services, and planners.
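The following minimal Python sketch shows that separation. It assumes a hypothetical `ChatService` interface. The kernel depends only on this interface, so two specialized kernels can be assembled from different services and plugin sets without changing the kernel class. None of these names come from the Semantic Kernel SDK, and both services are offline fakes.

```python
# Hypothetical sketch of swapping services behind one kernel; NOT the SDK API.
from dataclasses import dataclass, field
from typing import Callable, Dict, Protocol


class ChatService(Protocol):
    """Hypothetical interface that any AI service must satisfy."""

    def complete(self, prompt: str) -> str: ...


class FakeCloudModel:
    def complete(self, prompt: str) -> str:
        return f"cloud: {prompt}"


class FakeLocalModel:
    def complete(self, prompt: str) -> str:
        return f"local: {prompt}"


@dataclass
class Kernel:
    service: ChatService
    plugins: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def ask(self, prompt: str) -> str:
        # The kernel knows only the interface, not which model sits behind it.
        return self.service.complete(prompt)


def build_support_kernel() -> Kernel:
    # Specialized kernel: cloud model plus a hypothetical order-lookup plugin.
    return Kernel(FakeCloudModel(), {"orders": lambda order_id: f"order {order_id}: shipped"})


def build_offline_kernel() -> Kernel:
    # Same Kernel class, different service, no plugins.
    return Kernel(FakeLocalModel())


support = build_support_kernel()
offline = build_offline_kernel()
print(support.ask("Hello"))             # cloud: Hello
print(offline.ask("Hello"))             # local: Hello
print(support.plugins["orders"]("42"))  # order 42: shipped
```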

Here’s an example:

Suppose a user is talking to a chatbot integrated into a website. Whenever the user enters a message, the query is sent to the kernel responsible for the chatbot service.

The kernel is preconfigured with the AI services, planners, and plugins needed to answer the user’s query. These plugins can be as simple as a function that returns the current date and time or as complex as a complete third-party integration.

The planner generates a plan containing the execution steps and the plugin functions they require. Where necessary, it also retrieves relevant context, such as the chat history and previously stored memories, to answer the user’s query.

Once the plan executes successfully, the final response is sent back to the user.
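The chatbot flow above can be sketched in plain Python as follows. This is a simplified illustration under loud assumptions. The planner here is a hypothetical keyword rule standing in for the AI model that a real planner would use. The plugins are hypothetical fakes, and "memory" is just a list of earlier messages. Nothing here is the Semantic Kernel SDK API.

```python
# Hypothetical sketch of the chatbot flow; NOT the Semantic Kernel SDK API.
from datetime import datetime
from typing import Callable, Dict, List

# Hypothetical plugin functions the kernel is preconfigured with.
PLUGINS: Dict[str, Callable[[], str]] = {
    # Fixed timestamp keeps the example deterministic.
    "time.now": lambda: datetime(2024, 1, 15, 9, 30).strftime("%Y-%m-%d %H:%M"),
    # Stand-in for a third-party integration such as a weather API.
    "weather.today": lambda: "sunny, 21 C",
}


def plan(goal: str) -> List[str]:
    """Stand-in planner: a keyword rule, not an AI model, picks the steps."""
    steps: List[str] = []
    if "time" in goal or "date" in goal:
        steps.append("time.now")
    if "weather" in goal:
        steps.append("weather.today")
    return steps


class ChatbotKernel:
    def __init__(self) -> None:
        self.history: List[str] = []  # memory: earlier user messages

    def handle(self, query: str) -> str:
        # 1. The planner turns the user's goal into a list of plugin steps.
        steps = plan(query.lower())
        # 2. Each step runs its plugin function.
        results = [f"{step} -> {PLUGINS[step]()}" for step in steps]
        # 3. Context is retrieved from memory (here, just a count of earlier messages).
        context = f"{len(self.history)} earlier message(s)"
        # A real kernel would pass results and context to an AI service to
        # phrase the answer; this sketch simply joins the pieces.
        answer = "; ".join(results) if results else "No plugin needed."
        self.history.append(query)
        return f"{answer} [context: {context}]"


bot = ChatbotKernel()
print(bot.handle("What time is it?"))
print(bot.handle("And the weather?"))
```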

Conclusion

Semantic Kernel helps developers of all experience levels integrate AI into their applications. With its modular design, orchestration capabilities, and supportive community, it is a strong choice for adding AI to your applications.