Understanding Response Format Limitations: Why Llama, Phi & Mistral Models Struggle in Azure AI Studio

A comprehensive exploration of Response Format compatibility issues when working with Llama, Phi, and Mistral model families in Microsoft Azure AI Studio with Semantic Kernel, and how to effectively navigate these limitations.

What is Response Format in Semantic Kernel?

When building AI applications with Microsoft Semantic Kernel, developers often need to receive responses in specific structured formats to integrate with downstream processes seamlessly. Response Format is a powerful feature that allows developers to define the schema or structure of the output they expect from an AI model. This capability is essential for applications that require predictable, parseable data structures rather than free-form text.

In Semantic Kernel, Response Format enables developers to specify JSON schemas that models should follow when responding. This creates consistency in your AI application outputs and simplifies integration with databases, APIs, and business logic. However, not all AI models support this functionality, creating challenges when building applications that require structured data.
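To make "structured output" concrete, here is a minimal sketch of what a downstream consumer gains when a reply does follow an agreed shape. The `ProductMetadata` type and its property names are assumptions made up for illustration; they do not come from Semantic Kernel or from any particular model. Only `System.Text.Json` from the .NET base library is used.

```csharp
using System;
using System.Text.Json;

// Hypothetical target type; the property names are illustrative assumptions.
public sealed record ProductMetadata(string Name, string Category, decimal Price);

public static class StructuredOutputDemo
{
    // Turns a model reply that conforms to the expected shape into a typed object.
    public static ProductMetadata Parse(string modelReply)
    {
        // Accept "name" as well as "Name", since models rarely match C# casing.
        var options = new JsonSerializerOptions { PropertyNameCaseInsensitive = true };

        return JsonSerializer.Deserialize<ProductMetadata>(modelReply, options)
            ?? throw new JsonException("The reply was the JSON literal null.");
    }
}
```

When the model honors the requested format, this one call is all the integration code needs. When it does not, `Deserialize` throws a `JsonException`, which is the failure the rest of this article is about.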

The Compatibility Issue

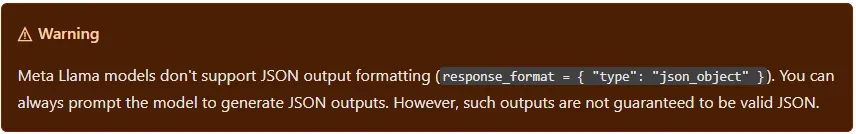

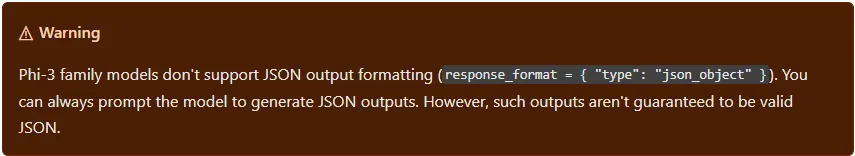

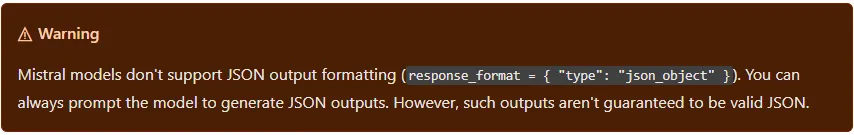

While Response Format is an extremely useful feature, it has a critical limitation when working in Microsoft Azure AI Studio: not all models support it equally. In particular, Llama, Phi, and Mistral family models accessed through Semantic Kernel do not reliably honor Response Format specifications.

Key Problems Observed

When attempting to use Response Format with unsupported models in Microsoft Azure AI Studio through Semantic Kernel, you’ll typically encounter one of two problematic outcomes:

- Random or broken responses: The model might return malformed content, incomplete data, or completely irrelevant outputs.

- Request timeout: After waiting for the full timeout duration, you receive no response, wasting valuable processing time and potentially disrupting your application flow.
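Both outcomes can be caught defensively before they reach the rest of the application. The following is a minimal sketch, not a Semantic Kernel API: `callModel` is a hypothetical stand-in for whatever code sends the prompt, and the sketch assumes that code honors the `CancellationToken` it receives (if it ignores the token, the timeout here has no effect).

```csharp
using System;
using System.Text.Json;
using System.Threading;
using System.Threading.Tasks;

public enum CallOutcome { Ok, Malformed, TimedOut }

public static class GuardedCall
{
    // callModel is a hypothetical delegate wrapping the actual model request.
    public static async Task<(CallOutcome Outcome, string? Json)> RunAsync(
        Func<CancellationToken, Task<string>> callModel, TimeSpan timeout)
    {
        // Cancels the token automatically once the timeout elapses.
        using var cts = new CancellationTokenSource(timeout);

        string reply;
        try
        {
            reply = await callModel(cts.Token) ?? string.Empty;
        }
        catch (OperationCanceledException)
        {
            return (CallOutcome.TimedOut, null);
        }

        try
        {
            // A usable structured reply must at least parse as a JSON object.
            using var doc = JsonDocument.Parse(reply);
            if (doc.RootElement.ValueKind != JsonValueKind.Object)
                return (CallOutcome.Malformed, null);
            return (CallOutcome.Ok, reply);
        }
        catch (JsonException)
        {
            return (CallOutcome.Malformed, null);
        }
    }
}
```

Choosing a timeout shorter than the client's default means a stuck request fails fast instead of consuming the full wait, and the `Malformed` outcome gives the caller a single place to decide whether to retry or fall back.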

Microsoft’s official documentation acknowledges this limitation. As the screenshots earlier in this section show, Response Format is explicitly listed as unsupported for these model families when using Microsoft Azure AI Studio.

This limitation exists even though the execution settings interface allows you to specify a Response Format parameter for these models. This inconsistency can be particularly confusing for developers who might assume that if a parameter can be set, it should work with all available models.
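Because the settings object will not stop you, one option is to add your own guard before setting the parameter. The sketch below is an assumption-heavy illustration: it guesses the model family from the model name, which only works if your deployment names contain the family name, and the helper `ResponseFormatGuard` is hypothetical rather than part of Semantic Kernel.

```csharp
using System;

public static class ResponseFormatGuard
{
    // Families this article reports as unsupported in Azure AI Studio.
    // Detecting them by substring is an assumption about how models are named.
    private static readonly string[] UnsupportedFamilies = { "llama", "phi", "mistral" };

    // Returns true when the name suggests a family without Response Format support.
    public static bool IsLikelyUnsupported(string modelName)
    {
        if (string.IsNullOrWhiteSpace(modelName)) return false;

        foreach (var family in UnsupportedFamilies)
        {
            if (modelName.IndexOf(family, StringComparison.OrdinalIgnoreCase) >= 0)
                return true;
        }
        return false;
    }
}
```

A caller would set Response Format only when `IsLikelyUnsupported` returns false and otherwise fall back to prompt-based structuring. Substring matching can misfire on unrelated names that happen to contain "phi", so an explicit allow-list of deployment names is the safer choice where one is practical.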

Recommended Solutions

Solution 1: Use Compatible Models

The most reliable solution is to use models that fully support Response Format functionality:

- OpenAI GPT Models

- Google Gemini Models

Using these models ensures consistent, reliable structured outputs that conform to your specified JSON schemas.

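using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.Connectors.OpenAI;

var prompt = "Generate product metadata in JSON";

// apiKey is your OpenAI API key, loaded elsewhere (for example from configuration).
var kernel = Kernel.CreateBuilder()
    .AddOpenAIChatCompletion("gpt-4", apiKey)
    .Build();

var settings = new OpenAIPromptExecutionSettings {
    // Set ResponseFormat once; an object initializer cannot assign it twice.
    ResponseFormat = "json_object",          // generic JSON mode
    // ResponseFormat = typeof(YourModel),   // alternative: schema derived from your own type
    Temperature = 0
};

Solution 2: Structure-Encouraging Prompts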

You can attempt to guide the model toward structured outputs through careful prompt engineering:

Please provide your response in the following JSON format:
{
  "recommendation": "string",
  "reasoning": "string",
  "confidenceScore": number
}
Make sure to maintain a valid JSON structure and include all fields.

Important limitations of this approach:

- No guarantee of consistent output format

- May require additional validation and error handling

- Format adherence may degrade with complex reasoning tasks

- Can consume more tokens in your prompt
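Since prompt-based structuring offers no guarantees, the reply should be validated before use. Here is a minimal sketch of such a check for the three fields requested in the prompt above. The `Recommendation` record and the `ReplyValidator` helper are hypothetical names introduced for illustration, and the "outermost braces" extraction is a simple heuristic, not a complete parser for every way a model might wrap its JSON.

```csharp
using System;
using System.Text.Json;

// Hypothetical typed view of the three fields requested in the prompt.
public sealed record Recommendation(string Text, string Reasoning, double ConfidenceScore);

public static class ReplyValidator
{
    // Returns true only if the reply contains a JSON object with all three
    // fields present and of the expected JSON types.
    public static bool TryParse(string reply, out Recommendation? result)
    {
        result = null;
        if (string.IsNullOrWhiteSpace(reply)) return false;

        // Models often surround JSON with prose or code fences,
        // so keep only the span from the first '{' to the last '}'.
        int start = reply.IndexOf('{');
        int end = reply.LastIndexOf('}');
        if (start < 0 || end <= start) return false;

        try
        {
            using var doc = JsonDocument.Parse(reply.Substring(start, end - start + 1));
            var root = doc.RootElement;

            if (!root.TryGetProperty("recommendation", out var rec) ||
                rec.ValueKind != JsonValueKind.String) return false;
            if (!root.TryGetProperty("reasoning", out var why) ||
                why.ValueKind != JsonValueKind.String) return false;
            if (!root.TryGetProperty("confidenceScore", out var score) ||
                score.ValueKind != JsonValueKind.Number) return false;

            result = new Recommendation(rec.GetString()!, why.GetString()!, score.GetDouble());
            return true;
        }
        catch (JsonException)
        {
            return false; // Truncated or otherwise invalid JSON.
        }
    }
}
```

A caller can retry the prompt a bounded number of times while `TryParse` returns false, then surface an error, which keeps malformed replies from propagating into databases or downstream APIs.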

Conclusion

Understanding the limitations of Response Format support across different model families is essential when developing applications with Microsoft Azure AI Studio and Semantic Kernel. While Llama, Phi, and Mistral models offer compelling capabilities in many areas, their lack of Response Format support requires careful consideration and potentially alternative approaches to ensure your application functions reliably. By being aware of these constraints and implementing appropriate workarounds or model selections, you can still leverage the full power of Azure AI Studio while avoiding unexpected failures related to Response Format specifications.