What is Prompt Engineering

Designing effective prompts for emerging AI-powered applications.

Yash Worlikar Mon Jan 15 2024 4 min read

Introduction

The AI field is evolving quickly and has seen many advancements and breakthroughs in recent years.

Language models such as GPT and Llama, and image generation models such as DALL-E 3 and Stable Diffusion, have risen in popularity at record-breaking speed. This boom has given rise to new fields that meet the resulting demand.

Prompt engineering is one such emerging field. It involves designing effective and relevant prompts for these AI models. A prompt is any input that you give an AI model to generate an output. Prompt engineering is the practice of selecting the instructions and formats that guide these models toward accurate and desired outputs.

Why is prompt engineering necessary?

Prompts can greatly affect the outputs generated by the AI models. They can be simple or complex, depending on the task and the desired output.

For example, the following prompts produce very different outputs:

Prompt: Tell me about Dinosaurs.

Output: A long essay about Dinosaurs.

Prompt: Tell me about Dinosaurs in brief.

Output: A short paragraph about dinosaurs.

Different AI models have different capabilities, strengths, and weaknesses, and may require different types of prompts. For example, some models are better at generating natural language, while others are better at generating code or images.

Even the prompt structure can differ vastly from one model to another, because it depends on the data the model was trained or fine-tuned on. A prompt that works well on one model may produce nonsensical answers on a different model.
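To make the difference in prompt structure concrete, here is a minimal sketch that wraps the same system and user text in two chat formats. The function names are hypothetical, and the templates are simplified versions of the Llama 2 chat style and the ChatML style; the exact format a given model expects should be taken from its own documentation.

```python
def format_llama2(system: str, user: str) -> str:
    """Simplified Llama-2-chat style: system text sits in <<SYS>> inside [INST]."""
    return f"[INST] <<SYS>>\n{system}\n<</SYS>>\n\n{user} [/INST]"


def format_chatml(system: str, user: str) -> str:
    """Simplified ChatML style: each turn is wrapped in <|im_start|> / <|im_end|>."""
    return (
        f"<|im_start|>system\n{system}<|im_end|>\n"
        f"<|im_start|>user\n{user}<|im_end|>\n"
        "<|im_start|>assistant\n"
    )


# The same content, structured for two different (assumed) model families.
system = "You are a concise assistant."
user = "Tell me about Dinosaurs in brief."

llama_prompt = format_llama2(system, user)
chatml_prompt = format_chatml(system, user)

print(llama_prompt)
print("---")
print(chatml_prompt)
```

The instructions are identical in both cases; only the wrapper changes. Sending one model the other's wrapper is a common way to end up with the nonsensical answers described above.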

Prompt engineering isn’t just about writing prompts. It also involves choosing the right LLM, setting appropriate parameters, and evaluating and improving the outputs until they suit your use case.
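The article does not name specific parameters, so as one common example, here is a rough sketch of how a sampling temperature reshapes the probabilities a model assigns to candidate tokens. The scores are made up for illustration, and real LLM APIs apply this internally rather than exposing it as a function like this one.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]

low = softmax_with_temperature(logits, 0.2)   # low temperature: near-deterministic
high = softmax_with_temperature(logits, 2.0)  # high temperature: more varied

print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

With a low temperature, almost all of the probability goes to the top-scoring token, which suits tasks that need consistent answers. A higher temperature spreads probability across the alternatives, which can help with more creative tasks.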

What makes prompt engineering so effective?

The main reason prompt engineering is so effective is rooted in how these models are designed and function.

When you input a sentence or a sequence of words into the model, it goes through several layers of processing within the model. At some point, the input gets transformed into a numerical representation in the model’s internal structure. This representation is in a latent space, hidden from direct observation.

The key idea behind the latent space is that similar inputs or concepts should be closer to each other in this space. This allows the model to perform various tasks like language generation, translation, summarization, etc., by manipulating this latent space representation.

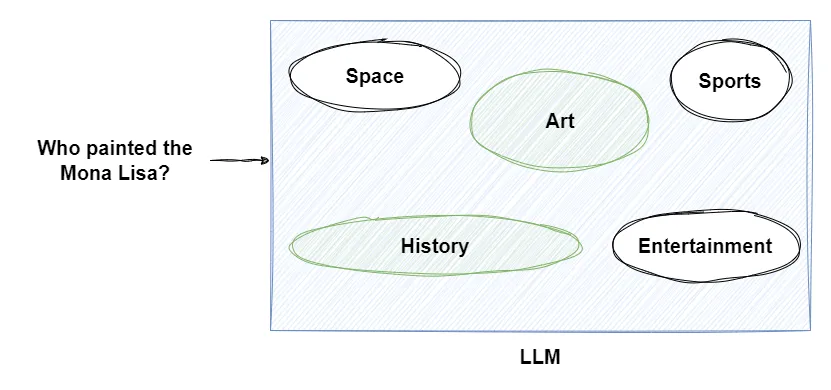

Internally, the models form local regions of sentences and words that are closely related to each other. For example, a language model may hold a complex representation of the patterns, ideas, concepts, and domains that are present in natural language.

Using specific words and patterns in our input steers the LLM’s responses toward those ideas and concepts, giving us more relevant results. In a sense, we are programming the model to generate a desired output using only natural language.

For example, if we asked an LLM “Who painted the Mona Lisa?”, the regions related to art and history would be activated, since they are the most closely associated with the query, while other regions such as sports and space would be ignored.
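The idea of nearby regions can be sketched with a toy example. The three-dimensional vectors below are hand-made for illustration (their axes loosely stand for art, sports, and space). Real models learn representations with far more dimensions, and those are not directly interpretable in this way.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: close to 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings; axes are roughly (art, sports, space).
embeddings = {
    "Who painted the Mona Lisa?": [0.9, 0.1, 0.0],
    "famous Renaissance paintings": [0.8, 0.0, 0.1],
    "football league results": [0.1, 0.9, 0.0],
    "orbit of Mars": [0.0, 0.1, 0.9],
}

query = "Who painted the Mona Lisa?"
scores = {
    text: cosine_similarity(embeddings[query], vector)
    for text, vector in embeddings.items()
    if text != query
}

# The entry whose vector points in the most similar direction to the query.
closest = max(scores, key=scores.get)
print(closest)
for text, score in scores.items():
    print(f"{score:.3f}  {text}")
```

In this toy setup the art-related entry scores far higher than the sports and space entries. That mirrors the intuition that a query mostly draws on the regions closest to it.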

Limitations of prompt engineering

While prompt engineering is a powerful technique, it does come with limitations. One significant constraint is its dependency on human-crafted inputs. Carefully crafted prompts help reduce hallucinations, but they do not eliminate them.

These prompts may also introduce biases, inaccuracies, or unintentional constraints that affect the model’s outputs. Additionally, prompt engineering might not always guarantee the desired output, especially when dealing with complex or abstract tasks. Different models can struggle with understanding context, nuances, or ambiguity in prompts, leading to inaccurate or irrelevant responses.

While prompt engineering may help in some cases, it’s heavily dependent on the capabilities of the model. Specializing a generative AI model for domain-specific requirements may still require fine-tuning.

Moreover, as AI models evolve and get updated, once-effective prompts might become obsolete or less effective. Keeping up with these changes and continuously optimizing prompts for updated models can be a significant challenge.
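One way to notice when a prompt stops working after a model update is to keep a small set of prompts with expected keywords and re-run them against each new version. The sketch below assumes a model is any function that takes a prompt string and returns text. The two "models" here are stand-in stubs rather than real API calls, and a keyword match is only a crude proxy for output quality.

```python
from typing import Callable, List, Tuple


def check_prompts(model: Callable[[str], str], cases: List[Tuple[str, str]]) -> List[str]:
    """Return the prompts whose output does not contain the expected keyword."""
    failures = []
    for prompt, expected in cases:
        output = model(prompt)
        if expected.lower() not in output.lower():
            failures.append(prompt)
    return failures


# Stub standing in for an older model version (hypothetical behavior).
def model_v1(prompt: str) -> str:
    return "Leonardo da Vinci painted the Mona Lisa."


# Stub standing in for an updated model that answers the same prompt differently.
def model_v2(prompt: str) -> str:
    return "It was painted in the early 16th century."


cases = [("Who painted the Mona Lisa?", "Leonardo")]

print(check_prompts(model_v1, cases))
print(check_prompts(model_v2, cases))
```

A prompt that shows up in the failure list for the newer version is a candidate for rewording or re-evaluation.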

Conclusion

Prompt engineering remains a critical skill for anyone working with the current generative AI models, offering a powerful way to guide and shape their outputs. However, it’s important to acknowledge its limitations and be mindful of the potential biases and constraints introduced through crafted inputs.

As AI evolves, prompt engineering strategies will also need to adapt to ensure continued effectiveness in harnessing these powerful models.